RNN语言翻译

02 Jun 2018 | deep-learning |语言翻译

这个项目是使用RNN实现英语到法语的翻译。

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

import helper

import problem_unittests as tests

source_path = 'data/small_vocab_en'

target_path = 'data/small_vocab_fr'

source_text = helper.load_data(source_path)

target_text = helper.load_data(target_path)

查看数据

view_sentence_range = (0, 10)

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

import numpy as np

print('Dataset Stats')

print('Roughly the number of unique words: {}'.format(len({word: None for word in source_text.split()})))

sentences = source_text.split('\n')

word_counts = [len(sentence.split()) for sentence in sentences]

print('Number of sentences: {}'.format(len(sentences)))

print('Average number of words in a sentence: {}'.format(np.average(word_counts)))

print()

print('English sentences {} to {}:'.format(*))

print('\n'.join(source_text.split('\n')[view_sentence_range[0]:view_sentence_range[1]]))

print()

print('French sentences {} to {}:'.format(*view_sentence_range))

print('\n'.join(target_text.split('\n')[view_sentence_range[0]:view_sentence_range[1]]))

File "<ipython-input-2-f34785c0978f>", line 17

print('English sentences {} to {}:'.format(*))

^

SyntaxError: invalid syntax

预处理函数

文本转ID

与其他RNN一样,我们首先需要把单词转化为计算机能够处理的数值。函数text_to_ids()可以将文本转化为整数id,注意需要在末尾加上<EOS>,让神经网络知道一个句子的结束。

def text_to_ids(source_text, target_text, source_vocab_to_int, target_vocab_to_int):

"""

Convert source and target text to proper word ids

:param source_text: String that contains all the source text.

:param target_text: String that contains all the target text.

:param source_vocab_to_int: Dictionary to go from the source words to an id

:param target_vocab_to_int: Dictionary to go from the target words to an id

:return: A tuple of lists (source_id_text, target_id_text)

"""

# TODO: Implement Function

source_id_text = []

for sentences in source_text.split('\n'):

sentence_id_text = []

for word in sentences.split():

idx = source_vocab_to_int[word]

sentence_id_text.append(idx)

source_id_text.append(sentence_id_text)

target_id_text = []

for sentences in target_text.split('\n'):

sentence_id_text = []

for word in sentences.split():

idx = target_vocab_to_int[word]

sentence_id_text.append(idx)

sentence_id_text.append(target_vocab_to_int['<EOS>'])

target_id_text.append(sentence_id_text)

return (source_id_text, target_id_text)

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_text_to_ids(text_to_ids)

保存处理后的数据

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

helper.preprocess_and_save_data(source_path, target_path, text_to_ids)

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

import numpy as np

import helper

import problem_unittests as tests

(source_int_text, target_int_text), (source_vocab_to_int, target_vocab_to_int), _ = helper.load_preprocess()

查看TensorFlow的版本

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

from distutils.version import LooseVersion

import warnings

import tensorflow as tf

from tensorflow.python.layers.core import Dense

# Check TensorFlow Version

assert LooseVersion(tf.__version__) >= LooseVersion('1.1'), 'Please use TensorFlow version 1.1 or newer'

print('TensorFlow Version: {}'.format(tf.__version__))

# Check for a GPU

if not tf.test.gpu_device_name():

warnings.warn('No GPU found. Please use a GPU to train your neural network.')

else:

print('Default GPU Device: {}'.format(tf.test.gpu_device_name()))

TensorFlow Version: 1.1.0

d:\Anaconda3\envs\tf1.1\lib\site-packages\ipykernel_launcher.py:15: UserWarning: No GPU found. Please use a GPU to train your neural network.

from ipykernel import kernelapp as app

构建神经网络

为了构建序列到序列的神经网络模型,我们需要实现下列模块:

model_inputsprocess_decoder_inputencoding_layerdecoding_layer_traindecoding_layer_inferdecoding_layerseq2seq_model

Input

函数model_inputs()用于创建输入参数。

def model_inputs():

"""

Create TF Placeholders for input, targets, learning rate, and lengths of source and target sequences.

:return: Tuple (input, targets, learning rate, keep probability, target sequence length,

max target sequence length, source sequence length)

"""

# TODO: Implement Function

input_ = tf.placeholder(tf.int32, [None, None], name='input')

targets = tf.placeholder(tf.int32, [None, None], name='targets')

lr = tf.placeholder(tf.float32, name='learning_rate')

keep_prob = tf.placeholder(tf.float32, name='keep_prob')

target_sequence_length = tf.placeholder(tf.int32, (None, ), name='target_sequence_length')

max_target_len = tf.reduce_max(target_sequence_length, name='max_target_len')

source_sequence_length = tf.placeholder(tf.int32, (None, ), name='source_sequence_length')

return input_, targets, lr, keep_prob, target_sequence_length, max_target_len, source_sequence_length

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_model_inputs(model_inputs)

Tests Passed

解码输入

def process_decoder_input(target_data, target_vocab_to_int, batch_size):

"""

Preprocess target data for encoding

:param target_data: Target Placehoder

:param target_vocab_to_int: Dictionary to go from the target words to an id

:param batch_size: Batch Size

:return: Preprocessed target data

"""

# TODO: Implement Function

ending = tf.strided_slice(target_data, [0, 0], [batch_size, -1], [1,1] ) # 移除最后一个id

dec_input = tf.concat([tf.fill([batch_size, 1], target_vocab_to_int['<GO>']), ending ], 1) #添加开始id

return dec_input

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_process_encoding_input(process_decoder_input)

Tests Passed

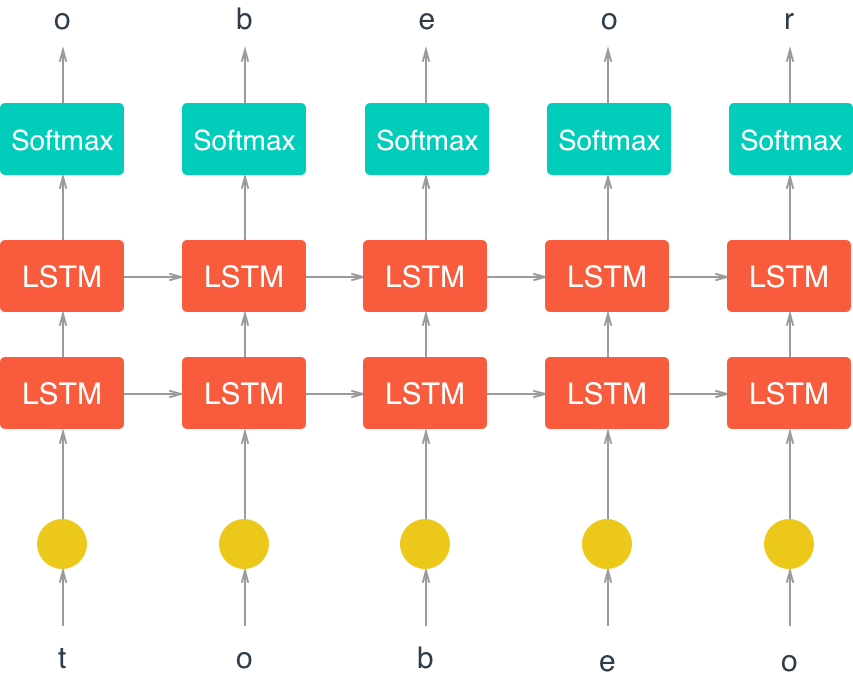

Encoding

函数encoding_layer() 用于创建RNN的编码层:

- 使用

tf.contrib.layers.embed_sequence实现嵌入的编码

from imp import reload

reload(tests)

def encoding_layer(rnn_inputs, rnn_size, num_layers, keep_prob,

source_sequence_length, source_vocab_size,

encoding_embedding_size):

"""

Create encoding layer

:param rnn_inputs: Inputs for the RNN

:param rnn_size: RNN Size

:param num_layers: Number of layers

:param keep_prob: Dropout keep probability

:param source_sequence_length: a list of the lengths of each sequence in the batch

:param source_vocab_size: vocabulary size of source data

:param encoding_embedding_size: embedding size of source data

:return: tuple (RNN output, RNN state)

"""

# TODO: Implement Function

enc_embed_input = tf.contrib.layers.embed_sequence(rnn_inputs, source_vocab_size, encoding_embedding_size)# id to vector

#RNN Cell

def make_cell(rnn_size):

enc_cell = tf.contrib.rnn.LSTMCell(rnn_size, initializer=tf.random_normal_initializer(-0.1, 0.1, seed=2))

enc_cell = tf.contrib.rnn.DropoutWrapper(enc_cell, keep_prob)

return enc_cell

enc_cell = tf.contrib.rnn.MultiRNNCell([make_cell(rnn_size) for _ in range(num_layers)] )

enc_output, enc_state = tf.nn.dynamic_rnn(enc_cell, enc_embed_input, sequence_length=source_sequence_length, dtype=tf.float32)

return enc_output, enc_state

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_encoding_layer(encoding_layer)

Tests Passed

解码-训练

创建训练解码层:

- 训练:

tf.contrib.seq2seq.TrainingHelper - 编码:

tf.contrib.seq2seq.BasicDecoder - 输出:

tf.contrib.seq2seq.dynamic_decode

def decoding_layer_train(encoder_state, dec_cell, dec_embed_input,

target_sequence_length, max_summary_length,

output_layer, keep_prob):

"""

Create a decoding layer for training

:param encoder_state: Encoder State

:param dec_cell: Decoder RNN Cell

:param dec_embed_input: Decoder embedded input

:param target_sequence_length: The lengths of each sequence in the target batch

:param max_summary_length: The length of the longest sequence in the batch

:param output_layer: Function to apply the output layer

:param keep_prob: Dropout keep probability

:return: BasicDecoderOutput containing training logits and sample_id

"""

# TODO: Implement Function

trainHelper = tf.contrib.seq2seq.TrainingHelper(inputs=dec_embed_input, sequence_length=target_sequence_length, time_major=False)

train_decoder = tf.contrib.seq2seq.BasicDecoder(dec_cell, trainHelper, encoder_state, output_layer)

train_decoder_out = tf.contrib.seq2seq.dynamic_decode(train_decoder, impute_finished=True, maximum_iterations=max_summary_length)[0]

return train_decoder_out

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_decoding_layer_train(decoding_layer_train)

Tests Passed

编码-推断

创建推断解码层:

- 输入:

tf.contrib.seq2seq.GreedyEmbeddingHelper - 编码:

tf.contrib.seq2seq.BasicDecoder - 输出:

tf.contrib.seq2seq.dynamic_decode

def decoding_layer_infer(encoder_state, dec_cell, dec_embeddings, start_of_sequence_id,

end_of_sequence_id, max_target_sequence_length,

vocab_size, output_layer, batch_size, keep_prob):

"""

Create a decoding layer for inference

:param encoder_state: Encoder state

:param dec_cell: Decoder RNN Cell

:param dec_embeddings: Decoder embeddings

:param start_of_sequence_id: GO ID

:param end_of_sequence_id: EOS Id

:param max_target_sequence_length: Maximum length of target sequences

:param vocab_size: Size of decoder/target vocabulary

:param decoding_scope: TenorFlow Variable Scope for decoding

:param output_layer: Function to apply the output layer

:param batch_size: Batch size

:param keep_prob: Dropout keep probability

:return: BasicDecoderOutput containing inference logits and sample_id

"""

# TODO: Implement Function

#start_tile = tf.tile(tf.constant(start_of_sequence_id,dtype=tf.int32), [batch_size])

#start_tokens = np.array([start_of_sequence_id] * batch_size)[None:]

start_tokens = tf.tile(tf.constant([start_of_sequence_id], dtype=tf.int32), [batch_size], name='start_tokens')

inference_helper = tf.contrib.seq2seq.GreedyEmbeddingHelper(dec_embeddings, start_tokens, end_of_sequence_id)

inference_decoder = tf.contrib.seq2seq.BasicDecoder(dec_cell, inference_helper, encoder_state, output_layer)

inference_decoder_output = tf.contrib.seq2seq.dynamic_decode(inference_decoder,

impute_finished=True,

maximum_iterations=max_target_sequence_length)[0]

return inference_decoder_output

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_decoding_layer_infer(decoding_layer_infer)

Tests Passed

解码层

函数decoding_layer()创建RNN解码层.

注意: 使用tf.variable_scope在训练与解码是共享变量。

def decoding_layer(dec_input, encoder_state,

target_sequence_length, max_target_sequence_length,

rnn_size,

num_layers, target_vocab_to_int, target_vocab_size,

batch_size, keep_prob, decoding_embedding_size):

"""

Create decoding layer

:param dec_input: Decoder input

:param encoder_state: Encoder state

:param target_sequence_length: The lengths of each sequence in the target batch

:param max_target_sequence_length: Maximum length of target sequences

:param rnn_size: RNN Size

:param num_layers: Number of layers

:param target_vocab_to_int: Dictionary to go from the target words to an id

:param target_vocab_size: Size of target vocabulary

:param batch_size: The size of the batch

:param keep_prob: Dropout keep probability

:param decoding_embedding_size: Decoding embedding size

:return: Tuple of (Training BasicDecoderOutput, Inference BasicDecoderOutput)

"""

# TODO: Implement Function

# 1. building decoder_embedding

dec_embeddings = tf.Variable(tf.random_uniform([target_vocab_size, decoding_embedding_size]))

dec_embed_input = tf.nn.embedding_lookup(dec_embeddings, dec_input)

# 2. construct decoder cell

def make_cell(rnn_size):

dec_cell = tf.contrib.rnn.LSTMCell(rnn_size, initializer=tf.random_uniform_initializer(-0.1, 0.1, seed=2))

dec_cell = tf.contrib.rnn.DropoutWrapper(dec_cell, keep_prob)

return dec_cell

dec_cell = tf.contrib.rnn.MultiRNNCell([make_cell(rnn_size) for _ in range(num_layers)])

# 3. construct output layer

output_layer = Dense(target_vocab_size, kernel_initializer=tf.random_normal_initializer(mean=0.0, stddev=0.1))

# 4 & 5 get (Training BasicDecoderOutput, Inference BasicDecoderOutput)

with tf.variable_scope('decode'):

training_decoder_output = decoding_layer_train(encoder_state, dec_cell, dec_embed_input,

target_sequence_length, max_target_sequence_length, output_layer, keep_prob)

with tf.variable_scope('decode', reuse=True):

inference_decoder_output = decoding_layer_infer(encoder_state, dec_cell, dec_embeddings, target_vocab_to_int['<GO>'],

target_vocab_to_int['<EOS>'], max_target_sequence_length, target_vocab_size, output_layer, batch_size, keep_prob)

return training_decoder_output, inference_decoder_output

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_decoding_layer(decoding_layer)

Tests Passed

构建神经网络

def seq2seq_model(input_data, target_data, keep_prob, batch_size,

source_sequence_length, target_sequence_length,

max_target_sentence_length,

source_vocab_size, target_vocab_size,

enc_embedding_size, dec_embedding_size,

rnn_size, num_layers, target_vocab_to_int):

"""

Build the Sequence-to-Sequence part of the neural network

:param input_data: Input placeholder

:param target_data: Target placeholder

:param keep_prob: Dropout keep probability placeholder

:param batch_size: Batch Size

:param source_sequence_length: Sequence Lengths of source sequences in the batch

:param target_sequence_length: Sequence Lengths of target sequences in the batch

:param source_vocab_size: Source vocabulary size

:param target_vocab_size: Target vocabulary size

:param enc_embedding_size: Encoder embedding size

:param dec_embedding_size: Decoder embedding size

:param rnn_size: RNN Size

:param num_layers: Number of layers

:param target_vocab_to_int: Dictionary to go from the target words to an id

:return: Tuple of (Training BasicDecoderOutput, Inference BasicDecoderOutput)

"""

# TODO: Implement Function

_, enc_state = encoding_layer(input_data, rnn_size, num_layers, keep_prob,

source_sequence_length, source_vocab_size,

enc_embedding_size)

dec_input = process_decoder_input(target_data, target_vocab_to_int, batch_size)

train_decoder_output, inference_decoder_output = decoding_layer(dec_input, enc_state, target_sequence_length,

max_target_sentence_length, rnn_size,num_layers,

target_vocab_to_int, target_vocab_size, batch_size,

keep_prob, dec_embedding_size)

return train_decoder_output, inference_decoder_output

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_seq2seq_model(seq2seq_model)

Tests Passed

训练神经网络

超参数

# Number of Epochs

epochs = 50

# Batch Size

batch_size = 128

# RNN Size

rnn_size = 128

# Number of Layers

num_layers = 2

# Embedding Size

encoding_embedding_size = 200

decoding_embedding_size = 200

# Learning Rate

learning_rate = 0.001

# Dropout Keep Probability

keep_probability = 0.8

display_step = 5

构建图

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

save_path = 'checkpoints/dev'

(source_int_text, target_int_text), (source_vocab_to_int, target_vocab_to_int), _ = helper.load_preprocess()

max_target_sentence_length = max([len(sentence) for sentence in source_int_text])

train_graph = tf.Graph()

with train_graph.as_default():

input_data, targets, lr, keep_prob, target_sequence_length, max_target_sequence_length, source_sequence_length = model_inputs()

#sequence_length = tf.placeholder_with_default(max_target_sentence_length, None, name='sequence_length')

input_shape = tf.shape(input_data)

train_logits, inference_logits = seq2seq_model(tf.reverse(input_data, [-1]),

targets,

keep_prob,

batch_size,

source_sequence_length,

target_sequence_length,

max_target_sequence_length,

len(source_vocab_to_int),

len(target_vocab_to_int),

encoding_embedding_size,

decoding_embedding_size,

rnn_size,

num_layers,

target_vocab_to_int)

training_logits = tf.identity(train_logits.rnn_output, name='logits')

inference_logits = tf.identity(inference_logits.sample_id, name='predictions')

masks = tf.sequence_mask(target_sequence_length, max_target_sequence_length, dtype=tf.float32, name='masks')

with tf.name_scope("optimization"):

# Loss function

cost = tf.contrib.seq2seq.sequence_loss(

training_logits,

targets,

masks)

# Optimizer

optimizer = tf.train.AdamOptimizer(lr)

# Gradient Clipping

gradients = optimizer.compute_gradients(cost)

capped_gradients = [(tf.clip_by_value(grad, -1., 1.), var) for grad, var in gradients if grad is not None]

train_op = optimizer.apply_gradients(capped_gradients)

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

def pad_sentence_batch(sentence_batch, pad_int):

"""Pad sentences with <PAD> so that each sentence of a batch has the same length"""

max_sentence = max([len(sentence) for sentence in sentence_batch])

return [sentence + [pad_int] * (max_sentence - len(sentence)) for sentence in sentence_batch]

def get_batches(sources, targets, batch_size, source_pad_int, target_pad_int):

"""Batch targets, sources, and the lengths of their sentences together"""

for batch_i in range(0, len(sources)//batch_size):

start_i = batch_i * batch_size

# Slice the right amount for the batch

sources_batch = sources[start_i:start_i + batch_size]

targets_batch = targets[start_i:start_i + batch_size]

# Pad

pad_sources_batch = np.array(pad_sentence_batch(sources_batch, source_pad_int))

pad_targets_batch = np.array(pad_sentence_batch(targets_batch, target_pad_int))

# Need the lengths for the _lengths parameters

pad_targets_lengths = []

for target in pad_targets_batch:

pad_targets_lengths.append(len(target))

pad_source_lengths = []

for source in pad_sources_batch:

pad_source_lengths.append(len(source))

yield pad_sources_batch, pad_targets_batch, pad_source_lengths, pad_targets_lengths

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

def get_accuracy(target, logits):

"""

Calculate accuracy

"""

max_seq = max(target.shape[1], logits.shape[1])

if max_seq - target.shape[1]:

target = np.pad(

target,

[(0,0),(0,max_seq - target.shape[1])],

'constant')

if max_seq - logits.shape[1]:

logits = np.pad(

logits,

[(0,0),(0,max_seq - logits.shape[1])],

'constant')

return np.mean(np.equal(target, logits))

# Split data to training and validation sets

train_source = source_int_text[batch_size:]

train_target = target_int_text[batch_size:]

valid_source = source_int_text[:batch_size]

valid_target = target_int_text[:batch_size]

(valid_sources_batch, valid_targets_batch, valid_sources_lengths, valid_targets_lengths ) = next(get_batches(valid_source,

valid_target,

batch_size,

source_vocab_to_int['<PAD>'],

target_vocab_to_int['<PAD>']))

with tf.Session(graph=train_graph) as sess:

sess.run(tf.global_variables_initializer())

for epoch_i in range(epochs):

for batch_i, (source_batch, target_batch, sources_lengths, targets_lengths) in enumerate(

get_batches(train_source, train_target, batch_size,

source_vocab_to_int['<PAD>'],

target_vocab_to_int['<PAD>'])):

_, loss = sess.run(

[train_op, cost],

{input_data: source_batch,

targets: target_batch,

lr: learning_rate,

target_sequence_length: targets_lengths,

source_sequence_length: sources_lengths,

keep_prob: keep_probability})

if batch_i % display_step == 0 and batch_i > 0:

batch_train_logits = sess.run(

inference_logits,

{input_data: source_batch,

source_sequence_length: sources_lengths,

target_sequence_length: targets_lengths,

keep_prob: 1.0})

batch_valid_logits = sess.run(

inference_logits,

{input_data: valid_sources_batch,

source_sequence_length: valid_sources_lengths,

target_sequence_length: valid_targets_lengths,

keep_prob: 1.0})

train_acc = get_accuracy(target_batch, batch_train_logits)

valid_acc = get_accuracy(valid_targets_batch, batch_valid_logits)

print('Epoch {:>3} Batch {:>4}/{} - Train Accuracy: {:>6.4f}, Validation Accuracy: {:>6.4f}, Loss: {:>6.4f}'

.format(epoch_i, batch_i, len(source_int_text) // batch_size, train_acc, valid_acc, loss))

# Save Model

saver = tf.train.Saver()

saver.save(sess, save_path)

print('Model Trained and Saved')

Epoch 0 Batch 5/1077 - Train Accuracy: 0.2605, Validation Accuracy: 0.3050, Loss: 4.8083

Epoch 0 Batch 10/1077 - Train Accuracy: 0.1982, Validation Accuracy: 0.3050, Loss: 4.5230

Epoch 0 Batch 15/1077 - Train Accuracy: 0.2355, Validation Accuracy: 0.3050, Loss: 4.0101

Epoch 0 Batch 20/1077 - Train Accuracy: 0.2676, Validation Accuracy: 0.3349, Loss: 3.7757

Epoch 0 Batch 25/1077 - Train Accuracy: 0.2738, Validation Accuracy: 0.3363, Loss: 3.6803

Epoch 0 Batch 30/1077 - Train Accuracy: 0.2668, Validation Accuracy: 0.3366, Loss: 3.5269

Epoch 0 Batch 35/1077 - Train Accuracy: 0.2840, Validation Accuracy: 0.3452, Loss: 3.4180

Epoch 0 Batch 40/1077 - Train Accuracy: 0.2859, Validation Accuracy: 0.3540, Loss: 3.3374

Epoch 0 Batch 45/1077 - Train Accuracy: 0.2906, Validation Accuracy: 0.3558, Loss: 3.2612

Epoch 0 Batch 50/1077 - Train Accuracy: 0.2734, Validation Accuracy: 0.3398, Loss: 3.2286

Epoch 0 Batch 55/1077 - Train Accuracy: 0.3180, Validation Accuracy: 0.3633, Loss: 3.0543

Epoch 0 Batch 60/1077 - Train Accuracy: 0.3129, Validation Accuracy: 0.3548, Loss: 2.9829

Epoch 0 Batch 65/1077 - Train Accuracy: 0.2467, Validation Accuracy: 0.3452, Loss: 3.2078

Epoch 0 Batch 70/1077 - Train Accuracy: 0.2385, Validation Accuracy: 0.3434, Loss: 3.1128

Epoch 0 Batch 75/1077 - Train Accuracy: 0.2992, Validation Accuracy: 0.3580, Loss: 2.8538

Epoch 0 Batch 80/1077 - Train Accuracy: 0.3152, Validation Accuracy: 0.3757, Loss: 2.8607

Epoch 0 Batch 85/1077 - Train Accuracy: 0.3309, Validation Accuracy: 0.3885, Loss: 2.7357

Epoch 0 Batch 90/1077 - Train Accuracy: 0.3281, Validation Accuracy: 0.4020, Loss: 2.8434

Epoch 0 Batch 95/1077 - Train Accuracy: 0.3575, Validation Accuracy: 0.3981, Loss: 2.6422

Epoch 0 Batch 100/1077 - Train Accuracy: 0.3445, Validation Accuracy: 0.4059, Loss: 2.7220

Epoch 0 Batch 105/1077 - Train Accuracy: 0.3582, Validation Accuracy: 0.4094, Loss: 2.6024

Epoch 0 Batch 110/1077 - Train Accuracy: 0.3855, Validation Accuracy: 0.4240, Loss: 2.5641

Epoch 0 Batch 115/1077 - Train Accuracy: 0.3605, Validation Accuracy: 0.4268, Loss: 2.6026

Epoch 0 Batch 120/1077 - Train Accuracy: 0.3785, Validation Accuracy: 0.4268, Loss: 2.5283

Epoch 0 Batch 125/1077 - Train Accuracy: 0.3865, Validation Accuracy: 0.4414, Loss: 2.4286

Epoch 0 Batch 130/1077 - Train Accuracy: 0.4062, Validation Accuracy: 0.4602, Loss: 2.3033

Epoch 0 Batch 135/1077 - Train Accuracy: 0.3664, Validation Accuracy: 0.4528, Loss: 2.4416

Epoch 0 Batch 140/1077 - Train Accuracy: 0.3363, Validation Accuracy: 0.4318, Loss: 2.4486

Epoch 0 Batch 145/1077 - Train Accuracy: 0.3922, Validation Accuracy: 0.4347, Loss: 2.2174

Epoch 0 Batch 150/1077 - Train Accuracy: 0.3984, Validation Accuracy: 0.4403, Loss: 2.1143

Epoch 0 Batch 155/1077 - Train Accuracy: 0.3762, Validation Accuracy: 0.4389, Loss: 2.1498

Epoch 0 Batch 160/1077 - Train Accuracy: 0.4012, Validation Accuracy: 0.4389, Loss: 2.0739

Epoch 0 Batch 165/1077 - Train Accuracy: 0.3719, Validation Accuracy: 0.4453, Loss: 2.0320

Epoch 0 Batch 170/1077 - Train Accuracy: 0.3695, Validation Accuracy: 0.4457, Loss: 2.0678

Epoch 0 Batch 175/1077 - Train Accuracy: 0.3949, Validation Accuracy: 0.4428, Loss: 1.9046

Epoch 0 Batch 180/1077 - Train Accuracy: 0.4031, Validation Accuracy: 0.4474, Loss: 1.9053

Epoch 0 Batch 185/1077 - Train Accuracy: 0.3648, Validation Accuracy: 0.4421, Loss: 1.8736

Epoch 0 Batch 190/1077 - Train Accuracy: 0.4102, Validation Accuracy: 0.4513, Loss: 1.8367

Epoch 0 Batch 195/1077 - Train Accuracy: 0.3648, Validation Accuracy: 0.4421, Loss: 1.7875

Epoch 0 Batch 200/1077 - Train Accuracy: 0.3961, Validation Accuracy: 0.4471, Loss: 1.7693

Epoch 0 Batch 205/1077 - Train Accuracy: 0.3793, Validation Accuracy: 0.4442, Loss: 1.7260

Epoch 0 Batch 210/1077 - Train Accuracy: 0.3862, Validation Accuracy: 0.4421, Loss: 1.6790

Epoch 0 Batch 215/1077 - Train Accuracy: 0.3828, Validation Accuracy: 0.4460, Loss: 1.6478

Epoch 0 Batch 220/1077 - Train Accuracy: 0.3631, Validation Accuracy: 0.4439, Loss: 1.6524

Epoch 0 Batch 225/1077 - Train Accuracy: 0.3785, Validation Accuracy: 0.4567, Loss: 1.6018

Epoch 0 Batch 230/1077 - Train Accuracy: 0.4271, Validation Accuracy: 0.4418, Loss: 1.4768

Epoch 0 Batch 235/1077 - Train Accuracy: 0.4464, Validation Accuracy: 0.4496, Loss: 1.4412

Epoch 0 Batch 240/1077 - Train Accuracy: 0.4027, Validation Accuracy: 0.4492, Loss: 1.4963

Epoch 0 Batch 245/1077 - Train Accuracy: 0.4208, Validation Accuracy: 0.4570, Loss: 1.4102

Epoch 0 Batch 250/1077 - Train Accuracy: 0.4293, Validation Accuracy: 0.4496, Loss: 1.3399

Epoch 0 Batch 255/1077 - Train Accuracy: 0.3781, Validation Accuracy: 0.4496, Loss: 1.4565

Epoch 0 Batch 260/1077 - Train Accuracy: 0.4252, Validation Accuracy: 0.4570, Loss: 1.3361

Epoch 0 Batch 265/1077 - Train Accuracy: 0.4125, Validation Accuracy: 0.4492, Loss: 1.3508

Epoch 0 Batch 270/1077 - Train Accuracy: 0.3621, Validation Accuracy: 0.4542, Loss: 1.4343

Epoch 0 Batch 275/1077 - Train Accuracy: 0.4483, Validation Accuracy: 0.4609, Loss: 1.2768

Epoch 0 Batch 280/1077 - Train Accuracy: 0.4137, Validation Accuracy: 0.4513, Loss: 1.3412

Epoch 0 Batch 285/1077 - Train Accuracy: 0.4554, Validation Accuracy: 0.4638, Loss: 1.2315

Epoch 0 Batch 290/1077 - Train Accuracy: 0.4156, Validation Accuracy: 0.4542, Loss: 1.3239

Epoch 0 Batch 295/1077 - Train Accuracy: 0.4198, Validation Accuracy: 0.4769, Loss: 1.3635

Epoch 0 Batch 300/1077 - Train Accuracy: 0.3910, Validation Accuracy: 0.4769, Loss: 1.3149

Epoch 0 Batch 305/1077 - Train Accuracy: 0.4355, Validation Accuracy: 0.4691, Loss: 1.2292

Epoch 0 Batch 310/1077 - Train Accuracy: 0.4016, Validation Accuracy: 0.4773, Loss: 1.2079

Epoch 0 Batch 315/1077 - Train Accuracy: 0.4416, Validation Accuracy: 0.4776, Loss: 1.1580

Epoch 0 Batch 320/1077 - Train Accuracy: 0.4359, Validation Accuracy: 0.4826, Loss: 1.2018

Epoch 0 Batch 325/1077 - Train Accuracy: 0.4725, Validation Accuracy: 0.4727, Loss: 1.1155

Epoch 0 Batch 330/1077 - Train Accuracy: 0.4535, Validation Accuracy: 0.4759, Loss: 1.1712

Epoch 0 Batch 335/1077 - Train Accuracy: 0.5104, Validation Accuracy: 0.5025, Loss: 1.0513

Epoch 0 Batch 340/1077 - Train Accuracy: 0.4194, Validation Accuracy: 0.4954, Loss: 1.1615

Epoch 0 Batch 345/1077 - Train Accuracy: 0.4408, Validation Accuracy: 0.4801, Loss: 1.0666

Epoch 0 Batch 350/1077 - Train Accuracy: 0.4066, Validation Accuracy: 0.4936, Loss: 1.1759

Epoch 0 Batch 355/1077 - Train Accuracy: 0.4263, Validation Accuracy: 0.4844, Loss: 1.0969

Epoch 0 Batch 360/1077 - Train Accuracy: 0.4637, Validation Accuracy: 0.5249, Loss: 1.0746

Epoch 0 Batch 365/1077 - Train Accuracy: 0.4512, Validation Accuracy: 0.5241, Loss: 1.0831

Epoch 0 Batch 370/1077 - Train Accuracy: 0.4870, Validation Accuracy: 0.5234, Loss: 1.0119

Epoch 0 Batch 375/1077 - Train Accuracy: 0.5323, Validation Accuracy: 0.5270, Loss: 0.9655

Epoch 0 Batch 380/1077 - Train Accuracy: 0.4953, Validation Accuracy: 0.5412, Loss: 1.0100

Epoch 0 Batch 385/1077 - Train Accuracy: 0.5059, Validation Accuracy: 0.5341, Loss: 1.0293

Epoch 0 Batch 390/1077 - Train Accuracy: 0.4551, Validation Accuracy: 0.5295, Loss: 1.0598

Epoch 0 Batch 395/1077 - Train Accuracy: 0.5115, Validation Accuracy: 0.5277, Loss: 0.9646

Epoch 0 Batch 400/1077 - Train Accuracy: 0.4684, Validation Accuracy: 0.5320, Loss: 0.9979

Epoch 0 Batch 405/1077 - Train Accuracy: 0.4568, Validation Accuracy: 0.5298, Loss: 1.0415

Epoch 0 Batch 410/1077 - Train Accuracy: 0.4470, Validation Accuracy: 0.5366, Loss: 1.0260

Epoch 0 Batch 415/1077 - Train Accuracy: 0.5015, Validation Accuracy: 0.5359, Loss: 0.9450

Epoch 0 Batch 420/1077 - Train Accuracy: 0.4910, Validation Accuracy: 0.5437, Loss: 0.9645

Epoch 0 Batch 425/1077 - Train Accuracy: 0.5446, Validation Accuracy: 0.5394, Loss: 0.9257

Epoch 0 Batch 430/1077 - Train Accuracy: 0.4973, Validation Accuracy: 0.5469, Loss: 0.9471

Epoch 0 Batch 435/1077 - Train Accuracy: 0.4831, Validation Accuracy: 0.5295, Loss: 0.9968

Epoch 0 Batch 440/1077 - Train Accuracy: 0.5207, Validation Accuracy: 0.5518, Loss: 0.9646

Epoch 0 Batch 445/1077 - Train Accuracy: 0.4848, Validation Accuracy: 0.5408, Loss: 0.9771

Epoch 0 Batch 450/1077 - Train Accuracy: 0.4762, Validation Accuracy: 0.5348, Loss: 0.9330

Epoch 0 Batch 455/1077 - Train Accuracy: 0.5298, Validation Accuracy: 0.5504, Loss: 0.8659

Epoch 0 Batch 460/1077 - Train Accuracy: 0.4785, Validation Accuracy: 0.5447, Loss: 0.9152

Epoch 0 Batch 465/1077 - Train Accuracy: 0.4634, Validation Accuracy: 0.5415, Loss: 0.9382

Epoch 0 Batch 470/1077 - Train Accuracy: 0.4683, Validation Accuracy: 0.5387, Loss: 0.9340

Epoch 0 Batch 475/1077 - Train Accuracy: 0.5406, Validation Accuracy: 0.5540, Loss: 0.8482

Epoch 0 Batch 480/1077 - Train Accuracy: 0.4922, Validation Accuracy: 0.5508, Loss: 0.8971

Epoch 0 Batch 485/1077 - Train Accuracy: 0.5547, Validation Accuracy: 0.5490, Loss: 0.8493

Epoch 0 Batch 490/1077 - Train Accuracy: 0.5102, Validation Accuracy: 0.5476, Loss: 0.8771

Epoch 0 Batch 495/1077 - Train Accuracy: 0.5129, Validation Accuracy: 0.5483, Loss: 0.8648

Epoch 0 Batch 500/1077 - Train Accuracy: 0.5238, Validation Accuracy: 0.5451, Loss: 0.8492

Epoch 0 Batch 505/1077 - Train Accuracy: 0.5666, Validation Accuracy: 0.5508, Loss: 0.7669

Epoch 0 Batch 510/1077 - Train Accuracy: 0.5563, Validation Accuracy: 0.5522, Loss: 0.8442

Epoch 0 Batch 515/1077 - Train Accuracy: 0.5289, Validation Accuracy: 0.5543, Loss: 0.8565

Epoch 0 Batch 520/1077 - Train Accuracy: 0.5316, Validation Accuracy: 0.5547, Loss: 0.8016

Epoch 0 Batch 525/1077 - Train Accuracy: 0.5121, Validation Accuracy: 0.5582, Loss: 0.8266

Epoch 0 Batch 530/1077 - Train Accuracy: 0.5242, Validation Accuracy: 0.5600, Loss: 0.8288

Epoch 0 Batch 535/1077 - Train Accuracy: 0.5199, Validation Accuracy: 0.5604, Loss: 0.8232

Epoch 0 Batch 540/1077 - Train Accuracy: 0.5320, Validation Accuracy: 0.5586, Loss: 0.7885

Epoch 0 Batch 545/1077 - Train Accuracy: 0.5289, Validation Accuracy: 0.5607, Loss: 0.8512

Epoch 0 Batch 550/1077 - Train Accuracy: 0.5199, Validation Accuracy: 0.5536, Loss: 0.8394

Epoch 0 Batch 555/1077 - Train Accuracy: 0.5359, Validation Accuracy: 0.5568, Loss: 0.8136

Epoch 0 Batch 560/1077 - Train Accuracy: 0.5402, Validation Accuracy: 0.5604, Loss: 0.7884

Epoch 0 Batch 565/1077 - Train Accuracy: 0.5711, Validation Accuracy: 0.5732, Loss: 0.7767

Epoch 0 Batch 570/1077 - Train Accuracy: 0.5317, Validation Accuracy: 0.5703, Loss: 0.8306

Epoch 0 Batch 575/1077 - Train Accuracy: 0.5406, Validation Accuracy: 0.5827, Loss: 0.7810

Epoch 0 Batch 580/1077 - Train Accuracy: 0.5938, Validation Accuracy: 0.5756, Loss: 0.7210

Epoch 0 Batch 585/1077 - Train Accuracy: 0.5848, Validation Accuracy: 0.5795, Loss: 0.7176

Epoch 0 Batch 590/1077 - Train Accuracy: 0.5206, Validation Accuracy: 0.5717, Loss: 0.8007

Epoch 0 Batch 595/1077 - Train Accuracy: 0.5414, Validation Accuracy: 0.5749, Loss: 0.7814

Epoch 0 Batch 600/1077 - Train Accuracy: 0.5751, Validation Accuracy: 0.5742, Loss: 0.7263

Epoch 0 Batch 605/1077 - Train Accuracy: 0.5177, Validation Accuracy: 0.5788, Loss: 0.8328

Epoch 0 Batch 610/1077 - Train Accuracy: 0.5518, Validation Accuracy: 0.5795, Loss: 0.8061

Epoch 0 Batch 615/1077 - Train Accuracy: 0.5586, Validation Accuracy: 0.5803, Loss: 0.7556

Epoch 0 Batch 620/1077 - Train Accuracy: 0.5473, Validation Accuracy: 0.5838, Loss: 0.7495

Epoch 0 Batch 625/1077 - Train Accuracy: 0.5898, Validation Accuracy: 0.5827, Loss: 0.7442

Epoch 0 Batch 630/1077 - Train Accuracy: 0.5559, Validation Accuracy: 0.5803, Loss: 0.7417

Epoch 0 Batch 635/1077 - Train Accuracy: 0.5362, Validation Accuracy: 0.5810, Loss: 0.8056

Epoch 0 Batch 640/1077 - Train Accuracy: 0.5335, Validation Accuracy: 0.5820, Loss: 0.7234

Epoch 0 Batch 645/1077 - Train Accuracy: 0.5934, Validation Accuracy: 0.5842, Loss: 0.7048

Epoch 0 Batch 650/1077 - Train Accuracy: 0.5277, Validation Accuracy: 0.5870, Loss: 0.7597

Epoch 0 Batch 655/1077 - Train Accuracy: 0.5738, Validation Accuracy: 0.5881, Loss: 0.7522

Epoch 0 Batch 660/1077 - Train Accuracy: 0.5500, Validation Accuracy: 0.5902, Loss: 0.7522

Epoch 0 Batch 665/1077 - Train Accuracy: 0.5586, Validation Accuracy: 0.5831, Loss: 0.7223

Epoch 0 Batch 670/1077 - Train Accuracy: 0.5973, Validation Accuracy: 0.5820, Loss: 0.6779

Epoch 0 Batch 675/1077 - Train Accuracy: 0.5807, Validation Accuracy: 0.5845, Loss: 0.7108

Epoch 0 Batch 680/1077 - Train Accuracy: 0.5781, Validation Accuracy: 0.5845, Loss: 0.6970

Epoch 0 Batch 685/1077 - Train Accuracy: 0.5734, Validation Accuracy: 0.5785, Loss: 0.7288

Epoch 0 Batch 690/1077 - Train Accuracy: 0.5805, Validation Accuracy: 0.5813, Loss: 0.7104

Epoch 0 Batch 695/1077 - Train Accuracy: 0.5914, Validation Accuracy: 0.5952, Loss: 0.6910

Epoch 0 Batch 700/1077 - Train Accuracy: 0.5504, Validation Accuracy: 0.5881, Loss: 0.6965

Epoch 0 Batch 705/1077 - Train Accuracy: 0.5604, Validation Accuracy: 0.5941, Loss: 0.7670

Epoch 0 Batch 710/1077 - Train Accuracy: 0.5535, Validation Accuracy: 0.5881, Loss: 0.7017

Epoch 0 Batch 715/1077 - Train Accuracy: 0.5902, Validation Accuracy: 0.5934, Loss: 0.7112

Epoch 0 Batch 720/1077 - Train Accuracy: 0.5637, Validation Accuracy: 0.5763, Loss: 0.7357

Epoch 0 Batch 725/1077 - Train Accuracy: 0.5539, Validation Accuracy: 0.5916, Loss: 0.6535

Epoch 0 Batch 730/1077 - Train Accuracy: 0.5473, Validation Accuracy: 0.5906, Loss: 0.6991

Epoch 0 Batch 735/1077 - Train Accuracy: 0.5934, Validation Accuracy: 0.5945, Loss: 0.6747

Epoch 0 Batch 740/1077 - Train Accuracy: 0.5855, Validation Accuracy: 0.5906, Loss: 0.6733

Epoch 0 Batch 745/1077 - Train Accuracy: 0.5727, Validation Accuracy: 0.5831, Loss: 0.6784

Epoch 0 Batch 750/1077 - Train Accuracy: 0.5859, Validation Accuracy: 0.5987, Loss: 0.6757

Epoch 0 Batch 755/1077 - Train Accuracy: 0.5668, Validation Accuracy: 0.5952, Loss: 0.6835

Epoch 0 Batch 760/1077 - Train Accuracy: 0.6105, Validation Accuracy: 0.5998, Loss: 0.6823

Epoch 0 Batch 765/1077 - Train Accuracy: 0.5742, Validation Accuracy: 0.5824, Loss: 0.6438

Epoch 0 Batch 770/1077 - Train Accuracy: 0.5911, Validation Accuracy: 0.6040, Loss: 0.6375

Epoch 0 Batch 775/1077 - Train Accuracy: 0.6020, Validation Accuracy: 0.6009, Loss: 0.6641

Epoch 0 Batch 780/1077 - Train Accuracy: 0.5813, Validation Accuracy: 0.6037, Loss: 0.6857

Epoch 0 Batch 785/1077 - Train Accuracy: 0.6127, Validation Accuracy: 0.6005, Loss: 0.6280

Epoch 0 Batch 790/1077 - Train Accuracy: 0.5207, Validation Accuracy: 0.6019, Loss: 0.6873

Epoch 0 Batch 795/1077 - Train Accuracy: 0.5754, Validation Accuracy: 0.5923, Loss: 0.6784

Epoch 0 Batch 800/1077 - Train Accuracy: 0.5422, Validation Accuracy: 0.6080, Loss: 0.6445

Epoch 0 Batch 805/1077 - Train Accuracy: 0.6020, Validation Accuracy: 0.6136, Loss: 0.6446

Epoch 0 Batch 810/1077 - Train Accuracy: 0.6068, Validation Accuracy: 0.6030, Loss: 0.6128

Epoch 0 Batch 815/1077 - Train Accuracy: 0.5734, Validation Accuracy: 0.5987, Loss: 0.6453

Epoch 0 Batch 820/1077 - Train Accuracy: 0.5773, Validation Accuracy: 0.5998, Loss: 0.6690

Epoch 0 Batch 825/1077 - Train Accuracy: 0.5809, Validation Accuracy: 0.6143, Loss: 0.6262

Epoch 0 Batch 830/1077 - Train Accuracy: 0.5820, Validation Accuracy: 0.6158, Loss: 0.6406

Epoch 0 Batch 835/1077 - Train Accuracy: 0.5953, Validation Accuracy: 0.5966, Loss: 0.6416

Epoch 0 Batch 840/1077 - Train Accuracy: 0.6039, Validation Accuracy: 0.6143, Loss: 0.6224

Epoch 0 Batch 845/1077 - Train Accuracy: 0.5914, Validation Accuracy: 0.6087, Loss: 0.6269

Epoch 0 Batch 850/1077 - Train Accuracy: 0.5681, Validation Accuracy: 0.6040, Loss: 0.6682

Epoch 0 Batch 855/1077 - Train Accuracy: 0.5539, Validation Accuracy: 0.6037, Loss: 0.6337

Epoch 0 Batch 860/1077 - Train Accuracy: 0.5900, Validation Accuracy: 0.6090, Loss: 0.6241

Epoch 0 Batch 865/1077 - Train Accuracy: 0.6555, Validation Accuracy: 0.6168, Loss: 0.5708

Epoch 0 Batch 870/1077 - Train Accuracy: 0.5588, Validation Accuracy: 0.6062, Loss: 0.6795

Epoch 0 Batch 875/1077 - Train Accuracy: 0.5977, Validation Accuracy: 0.6033, Loss: 0.6428

Epoch 0 Batch 880/1077 - Train Accuracy: 0.6133, Validation Accuracy: 0.6005, Loss: 0.6240

Epoch 0 Batch 885/1077 - Train Accuracy: 0.6186, Validation Accuracy: 0.5920, Loss: 0.5451

Epoch 0 Batch 890/1077 - Train Accuracy: 0.6696, Validation Accuracy: 0.6033, Loss: 0.5961

Epoch 0 Batch 895/1077 - Train Accuracy: 0.5836, Validation Accuracy: 0.5987, Loss: 0.6172

Epoch 0 Batch 900/1077 - Train Accuracy: 0.6191, Validation Accuracy: 0.6055, Loss: 0.6275

Epoch 0 Batch 905/1077 - Train Accuracy: 0.6316, Validation Accuracy: 0.6083, Loss: 0.6014

Epoch 0 Batch 910/1077 - Train Accuracy: 0.5804, Validation Accuracy: 0.6051, Loss: 0.6173

Epoch 0 Batch 915/1077 - Train Accuracy: 0.5728, Validation Accuracy: 0.6101, Loss: 0.6454

Epoch 0 Batch 920/1077 - Train Accuracy: 0.5914, Validation Accuracy: 0.6229, Loss: 0.6226

Epoch 0 Batch 925/1077 - Train Accuracy: 0.5997, Validation Accuracy: 0.6250, Loss: 0.5980

Epoch 0 Batch 930/1077 - Train Accuracy: 0.6168, Validation Accuracy: 0.6104, Loss: 0.6036

Epoch 0 Batch 935/1077 - Train Accuracy: 0.6098, Validation Accuracy: 0.6101, Loss: 0.6258

Epoch 0 Batch 940/1077 - Train Accuracy: 0.5738, Validation Accuracy: 0.6119, Loss: 0.6155

Epoch 0 Batch 945/1077 - Train Accuracy: 0.6520, Validation Accuracy: 0.6232, Loss: 0.5851

Epoch 0 Batch 950/1077 - Train Accuracy: 0.5893, Validation Accuracy: 0.6300, Loss: 0.5792

Epoch 0 Batch 955/1077 - Train Accuracy: 0.6223, Validation Accuracy: 0.6179, Loss: 0.6139

Epoch 0 Batch 960/1077 - Train Accuracy: 0.6250, Validation Accuracy: 0.6168, Loss: 0.5776

Epoch 0 Batch 965/1077 - Train Accuracy: 0.5312, Validation Accuracy: 0.6115, Loss: 0.6411

Epoch 0 Batch 970/1077 - Train Accuracy: 0.6383, Validation Accuracy: 0.6083, Loss: 0.6053

Epoch 0 Batch 975/1077 - Train Accuracy: 0.6410, Validation Accuracy: 0.6204, Loss: 0.5618

Epoch 0 Batch 980/1077 - Train Accuracy: 0.6121, Validation Accuracy: 0.6168, Loss: 0.6017

Epoch 0 Batch 985/1077 - Train Accuracy: 0.6398, Validation Accuracy: 0.6300, Loss: 0.5913

Epoch 0 Batch 990/1077 - Train Accuracy: 0.6114, Validation Accuracy: 0.6424, Loss: 0.6343

Epoch 0 Batch 995/1077 - Train Accuracy: 0.6451, Validation Accuracy: 0.6293, Loss: 0.5774

Epoch 0 Batch 1000/1077 - Train Accuracy: 0.6384, Validation Accuracy: 0.6200, Loss: 0.5509

Epoch 0 Batch 1005/1077 - Train Accuracy: 0.5863, Validation Accuracy: 0.6232, Loss: 0.5954

Epoch 0 Batch 1010/1077 - Train Accuracy: 0.6141, Validation Accuracy: 0.6218, Loss: 0.5909

Epoch 0 Batch 1015/1077 - Train Accuracy: 0.5906, Validation Accuracy: 0.6218, Loss: 0.6251

Epoch 0 Batch 1020/1077 - Train Accuracy: 0.5809, Validation Accuracy: 0.6158, Loss: 0.5702

Epoch 0 Batch 1025/1077 - Train Accuracy: 0.6112, Validation Accuracy: 0.6207, Loss: 0.5632

Epoch 0 Batch 1030/1077 - Train Accuracy: 0.5926, Validation Accuracy: 0.6296, Loss: 0.5819

Epoch 0 Batch 1035/1077 - Train Accuracy: 0.6161, Validation Accuracy: 0.6310, Loss: 0.5380

Epoch 0 Batch 1040/1077 - Train Accuracy: 0.5863, Validation Accuracy: 0.6179, Loss: 0.6239

Epoch 0 Batch 1045/1077 - Train Accuracy: 0.5766, Validation Accuracy: 0.6183, Loss: 0.6033

Epoch 0 Batch 1050/1077 - Train Accuracy: 0.5496, Validation Accuracy: 0.6158, Loss: 0.5998

Epoch 0 Batch 1055/1077 - Train Accuracy: 0.6477, Validation Accuracy: 0.6218, Loss: 0.5815

Epoch 0 Batch 1060/1077 - Train Accuracy: 0.6156, Validation Accuracy: 0.6200, Loss: 0.5645

Epoch 0 Batch 1065/1077 - Train Accuracy: 0.6109, Validation Accuracy: 0.6200, Loss: 0.5749

Epoch 0 Batch 1070/1077 - Train Accuracy: 0.5746, Validation Accuracy: 0.6307, Loss: 0.5962

Epoch 0 Batch 1075/1077 - Train Accuracy: 0.5822, Validation Accuracy: 0.6076, Loss: 0.6106

Epoch 1 Batch 5/1077 - Train Accuracy: 0.5996, Validation Accuracy: 0.6175, Loss: 0.5985

Epoch 1 Batch 10/1077 - Train Accuracy: 0.6032, Validation Accuracy: 0.6264, Loss: 0.6020

Epoch 1 Batch 15/1077 - Train Accuracy: 0.6258, Validation Accuracy: 0.6261, Loss: 0.5724

Epoch 1 Batch 20/1077 - Train Accuracy: 0.6027, Validation Accuracy: 0.6332, Loss: 0.5607

Epoch 1 Batch 25/1077 - Train Accuracy: 0.6117, Validation Accuracy: 0.6261, Loss: 0.5491

Epoch 1 Batch 30/1077 - Train Accuracy: 0.6246, Validation Accuracy: 0.6300, Loss: 0.5745

Epoch 1 Batch 35/1077 - Train Accuracy: 0.6359, Validation Accuracy: 0.6371, Loss: 0.5738

Epoch 1 Batch 40/1077 - Train Accuracy: 0.6027, Validation Accuracy: 0.6310, Loss: 0.5788

Epoch 1 Batch 45/1077 - Train Accuracy: 0.6199, Validation Accuracy: 0.6300, Loss: 0.5803

Epoch 1 Batch 50/1077 - Train Accuracy: 0.5781, Validation Accuracy: 0.6449, Loss: 0.5687

Epoch 1 Batch 55/1077 - Train Accuracy: 0.6395, Validation Accuracy: 0.6353, Loss: 0.5657

Epoch 1 Batch 60/1077 - Train Accuracy: 0.6220, Validation Accuracy: 0.6257, Loss: 0.5495

Epoch 1 Batch 65/1077 - Train Accuracy: 0.5991, Validation Accuracy: 0.6222, Loss: 0.5902

Epoch 1 Batch 70/1077 - Train Accuracy: 0.5987, Validation Accuracy: 0.6392, Loss: 0.5856

Epoch 1 Batch 75/1077 - Train Accuracy: 0.6145, Validation Accuracy: 0.6222, Loss: 0.5772

Epoch 1 Batch 80/1077 - Train Accuracy: 0.6180, Validation Accuracy: 0.6346, Loss: 0.5648

Epoch 1 Batch 85/1077 - Train Accuracy: 0.6316, Validation Accuracy: 0.6346, Loss: 0.5538

Epoch 1 Batch 90/1077 - Train Accuracy: 0.6008, Validation Accuracy: 0.6271, Loss: 0.5740

Epoch 1 Batch 95/1077 - Train Accuracy: 0.6354, Validation Accuracy: 0.6431, Loss: 0.5806

Epoch 1 Batch 100/1077 - Train Accuracy: 0.6320, Validation Accuracy: 0.6353, Loss: 0.5637

Epoch 1 Batch 105/1077 - Train Accuracy: 0.6344, Validation Accuracy: 0.6364, Loss: 0.5667

Epoch 1 Batch 110/1077 - Train Accuracy: 0.6543, Validation Accuracy: 0.6388, Loss: 0.5296

Epoch 1 Batch 115/1077 - Train Accuracy: 0.6105, Validation Accuracy: 0.6286, Loss: 0.5762

Epoch 1 Batch 120/1077 - Train Accuracy: 0.6215, Validation Accuracy: 0.6410, Loss: 0.5823

Epoch 1 Batch 125/1077 - Train Accuracy: 0.6522, Validation Accuracy: 0.6374, Loss: 0.5353

Epoch 1 Batch 130/1077 - Train Accuracy: 0.6064, Validation Accuracy: 0.6204, Loss: 0.5278

Epoch 1 Batch 135/1077 - Train Accuracy: 0.6098, Validation Accuracy: 0.6193, Loss: 0.5752

Epoch 1 Batch 140/1077 - Train Accuracy: 0.5789, Validation Accuracy: 0.6186, Loss: 0.5785

Epoch 1 Batch 145/1077 - Train Accuracy: 0.6492, Validation Accuracy: 0.6232, Loss: 0.5743

Epoch 1 Batch 150/1077 - Train Accuracy: 0.6425, Validation Accuracy: 0.6310, Loss: 0.5267

Epoch 1 Batch 155/1077 - Train Accuracy: 0.5949, Validation Accuracy: 0.6275, Loss: 0.5566

Epoch 1 Batch 160/1077 - Train Accuracy: 0.6375, Validation Accuracy: 0.6254, Loss: 0.5456

Epoch 1 Batch 165/1077 - Train Accuracy: 0.6008, Validation Accuracy: 0.6303, Loss: 0.5546

Epoch 1 Batch 170/1077 - Train Accuracy: 0.5820, Validation Accuracy: 0.6328, Loss: 0.5766

Epoch 1 Batch 175/1077 - Train Accuracy: 0.6227, Validation Accuracy: 0.6293, Loss: 0.5383

Epoch 1 Batch 180/1077 - Train Accuracy: 0.6141, Validation Accuracy: 0.6271, Loss: 0.5561

Epoch 1 Batch 185/1077 - Train Accuracy: 0.5871, Validation Accuracy: 0.6289, Loss: 0.5799

Epoch 1 Batch 190/1077 - Train Accuracy: 0.6602, Validation Accuracy: 0.6374, Loss: 0.5266

Epoch 1 Batch 195/1077 - Train Accuracy: 0.5848, Validation Accuracy: 0.6232, Loss: 0.5565

Epoch 1 Batch 200/1077 - Train Accuracy: 0.5887, Validation Accuracy: 0.6229, Loss: 0.5677

Epoch 1 Batch 205/1077 - Train Accuracy: 0.6316, Validation Accuracy: 0.6268, Loss: 0.5407

Epoch 1 Batch 210/1077 - Train Accuracy: 0.6417, Validation Accuracy: 0.6193, Loss: 0.5307

Epoch 1 Batch 215/1077 - Train Accuracy: 0.6016, Validation Accuracy: 0.6151, Loss: 0.5620

Epoch 1 Batch 220/1077 - Train Accuracy: 0.6201, Validation Accuracy: 0.6374, Loss: 0.5532

Epoch 1 Batch 225/1077 - Train Accuracy: 0.6168, Validation Accuracy: 0.6378, Loss: 0.5630

Epoch 1 Batch 230/1077 - Train Accuracy: 0.6484, Validation Accuracy: 0.6303, Loss: 0.5334

Epoch 1 Batch 235/1077 - Train Accuracy: 0.6693, Validation Accuracy: 0.6243, Loss: 0.5004

Epoch 1 Batch 240/1077 - Train Accuracy: 0.6324, Validation Accuracy: 0.6158, Loss: 0.5301

Epoch 1 Batch 245/1077 - Train Accuracy: 0.6224, Validation Accuracy: 0.6314, Loss: 0.5168

Epoch 1 Batch 250/1077 - Train Accuracy: 0.5941, Validation Accuracy: 0.6317, Loss: 0.5089

Epoch 1 Batch 255/1077 - Train Accuracy: 0.6117, Validation Accuracy: 0.6236, Loss: 0.5360

Epoch 1 Batch 260/1077 - Train Accuracy: 0.6291, Validation Accuracy: 0.6232, Loss: 0.5047

Epoch 1 Batch 265/1077 - Train Accuracy: 0.5996, Validation Accuracy: 0.6261, Loss: 0.5525

Epoch 1 Batch 270/1077 - Train Accuracy: 0.5949, Validation Accuracy: 0.6300, Loss: 0.5549

Epoch 1 Batch 275/1077 - Train Accuracy: 0.6086, Validation Accuracy: 0.6126, Loss: 0.5160

Epoch 1 Batch 280/1077 - Train Accuracy: 0.6090, Validation Accuracy: 0.6250, Loss: 0.5528

Epoch 1 Batch 285/1077 - Train Accuracy: 0.6399, Validation Accuracy: 0.6250, Loss: 0.5180

Epoch 1 Batch 290/1077 - Train Accuracy: 0.6055, Validation Accuracy: 0.6261, Loss: 0.5638

Epoch 1 Batch 295/1077 - Train Accuracy: 0.6456, Validation Accuracy: 0.6321, Loss: 0.5648

Epoch 1 Batch 300/1077 - Train Accuracy: 0.6205, Validation Accuracy: 0.6332, Loss: 0.5409

Epoch 1 Batch 305/1077 - Train Accuracy: 0.6473, Validation Accuracy: 0.6307, Loss: 0.5153

Epoch 1 Batch 310/1077 - Train Accuracy: 0.5988, Validation Accuracy: 0.6204, Loss: 0.5532

Epoch 1 Batch 315/1077 - Train Accuracy: 0.6343, Validation Accuracy: 0.6214, Loss: 0.4952

Epoch 1 Batch 320/1077 - Train Accuracy: 0.6438, Validation Accuracy: 0.6435, Loss: 0.5247

Epoch 1 Batch 325/1077 - Train Accuracy: 0.6589, Validation Accuracy: 0.6438, Loss: 0.5153

Epoch 1 Batch 330/1077 - Train Accuracy: 0.6469, Validation Accuracy: 0.6193, Loss: 0.5157

Epoch 1 Batch 335/1077 - Train Accuracy: 0.6466, Validation Accuracy: 0.6282, Loss: 0.5103

Epoch 1 Batch 340/1077 - Train Accuracy: 0.5876, Validation Accuracy: 0.6197, Loss: 0.5442

Epoch 1 Batch 345/1077 - Train Accuracy: 0.6559, Validation Accuracy: 0.6243, Loss: 0.5144

Epoch 1 Batch 350/1077 - Train Accuracy: 0.6008, Validation Accuracy: 0.6239, Loss: 0.5370

Epoch 1 Batch 355/1077 - Train Accuracy: 0.6161, Validation Accuracy: 0.6225, Loss: 0.5102

Epoch 1 Batch 360/1077 - Train Accuracy: 0.6191, Validation Accuracy: 0.6371, Loss: 0.5161

Epoch 1 Batch 365/1077 - Train Accuracy: 0.5965, Validation Accuracy: 0.6321, Loss: 0.5296

Epoch 1 Batch 370/1077 - Train Accuracy: 0.6376, Validation Accuracy: 0.6335, Loss: 0.5137

Epoch 1 Batch 375/1077 - Train Accuracy: 0.6598, Validation Accuracy: 0.6491, Loss: 0.4865

Epoch 1 Batch 380/1077 - Train Accuracy: 0.6426, Validation Accuracy: 0.6367, Loss: 0.5186

Epoch 1 Batch 385/1077 - Train Accuracy: 0.6312, Validation Accuracy: 0.6381, Loss: 0.5282

Epoch 1 Batch 390/1077 - Train Accuracy: 0.5754, Validation Accuracy: 0.6317, Loss: 0.5454

Epoch 1 Batch 395/1077 - Train Accuracy: 0.6518, Validation Accuracy: 0.6360, Loss: 0.4965

Epoch 1 Batch 400/1077 - Train Accuracy: 0.6645, Validation Accuracy: 0.6410, Loss: 0.5279

Epoch 1 Batch 405/1077 - Train Accuracy: 0.6209, Validation Accuracy: 0.6385, Loss: 0.5774

Epoch 1 Batch 410/1077 - Train Accuracy: 0.6221, Validation Accuracy: 0.6477, Loss: 0.5409

Epoch 1 Batch 415/1077 - Train Accuracy: 0.6477, Validation Accuracy: 0.6420, Loss: 0.4855

Epoch 1 Batch 420/1077 - Train Accuracy: 0.6020, Validation Accuracy: 0.6303, Loss: 0.5089

Epoch 1 Batch 425/1077 - Train Accuracy: 0.6510, Validation Accuracy: 0.6183, Loss: 0.5038

Epoch 1 Batch 430/1077 - Train Accuracy: 0.6059, Validation Accuracy: 0.6175, Loss: 0.5206

Epoch 1 Batch 435/1077 - Train Accuracy: 0.6353, Validation Accuracy: 0.6488, Loss: 0.5409

Epoch 1 Batch 440/1077 - Train Accuracy: 0.6238, Validation Accuracy: 0.6346, Loss: 0.5324

Epoch 1 Batch 445/1077 - Train Accuracy: 0.5888, Validation Accuracy: 0.6264, Loss: 0.5373

Epoch 1 Batch 450/1077 - Train Accuracy: 0.5879, Validation Accuracy: 0.6371, Loss: 0.4933

Epoch 1 Batch 455/1077 - Train Accuracy: 0.6346, Validation Accuracy: 0.6214, Loss: 0.4949

Epoch 1 Batch 460/1077 - Train Accuracy: 0.6387, Validation Accuracy: 0.6335, Loss: 0.5275

Epoch 1 Batch 465/1077 - Train Accuracy: 0.6414, Validation Accuracy: 0.6332, Loss: 0.5563

Epoch 1 Batch 470/1077 - Train Accuracy: 0.5958, Validation Accuracy: 0.6325, Loss: 0.5396

Epoch 1 Batch 475/1077 - Train Accuracy: 0.6395, Validation Accuracy: 0.6286, Loss: 0.5121

Epoch 1 Batch 480/1077 - Train Accuracy: 0.6517, Validation Accuracy: 0.6360, Loss: 0.5179

Epoch 1 Batch 485/1077 - Train Accuracy: 0.6656, Validation Accuracy: 0.6353, Loss: 0.5270

Epoch 1 Batch 490/1077 - Train Accuracy: 0.6117, Validation Accuracy: 0.6357, Loss: 0.5264

Epoch 1 Batch 495/1077 - Train Accuracy: 0.6234, Validation Accuracy: 0.6317, Loss: 0.4959

Epoch 1 Batch 500/1077 - Train Accuracy: 0.6258, Validation Accuracy: 0.6495, Loss: 0.5059

Epoch 1 Batch 505/1077 - Train Accuracy: 0.6611, Validation Accuracy: 0.6463, Loss: 0.4723

Epoch 1 Batch 510/1077 - Train Accuracy: 0.6305, Validation Accuracy: 0.6388, Loss: 0.5007

Epoch 1 Batch 515/1077 - Train Accuracy: 0.6191, Validation Accuracy: 0.6335, Loss: 0.5261

Epoch 1 Batch 520/1077 - Train Accuracy: 0.6656, Validation Accuracy: 0.6449, Loss: 0.4775

Epoch 1 Batch 525/1077 - Train Accuracy: 0.6438, Validation Accuracy: 0.6445, Loss: 0.5058

Epoch 1 Batch 530/1077 - Train Accuracy: 0.6219, Validation Accuracy: 0.6534, Loss: 0.5169

Epoch 1 Batch 535/1077 - Train Accuracy: 0.6129, Validation Accuracy: 0.6374, Loss: 0.5123

Epoch 1 Batch 540/1077 - Train Accuracy: 0.6422, Validation Accuracy: 0.6424, Loss: 0.4728

Epoch 1 Batch 545/1077 - Train Accuracy: 0.6348, Validation Accuracy: 0.6420, Loss: 0.5339

Epoch 1 Batch 550/1077 - Train Accuracy: 0.6172, Validation Accuracy: 0.6413, Loss: 0.5250

Epoch 1 Batch 555/1077 - Train Accuracy: 0.6484, Validation Accuracy: 0.6357, Loss: 0.5100

Epoch 1 Batch 560/1077 - Train Accuracy: 0.6129, Validation Accuracy: 0.6349, Loss: 0.4940

Epoch 1 Batch 565/1077 - Train Accuracy: 0.6414, Validation Accuracy: 0.6417, Loss: 0.4974

Epoch 1 Batch 570/1077 - Train Accuracy: 0.6386, Validation Accuracy: 0.6399, Loss: 0.5339

Epoch 1 Batch 575/1077 - Train Accuracy: 0.6410, Validation Accuracy: 0.6278, Loss: 0.4950

Epoch 1 Batch 580/1077 - Train Accuracy: 0.6775, Validation Accuracy: 0.6484, Loss: 0.4654

Epoch 1 Batch 585/1077 - Train Accuracy: 0.6722, Validation Accuracy: 0.6442, Loss: 0.4788

Epoch 1 Batch 590/1077 - Train Accuracy: 0.6172, Validation Accuracy: 0.6438, Loss: 0.5276

Epoch 1 Batch 595/1077 - Train Accuracy: 0.6125, Validation Accuracy: 0.6278, Loss: 0.4971

Epoch 1 Batch 600/1077 - Train Accuracy: 0.6678, Validation Accuracy: 0.6388, Loss: 0.4639

Epoch 1 Batch 605/1077 - Train Accuracy: 0.6760, Validation Accuracy: 0.6499, Loss: 0.5144

Epoch 1 Batch 610/1077 - Train Accuracy: 0.6250, Validation Accuracy: 0.6477, Loss: 0.5105

Epoch 1 Batch 615/1077 - Train Accuracy: 0.6570, Validation Accuracy: 0.6484, Loss: 0.4885

Epoch 1 Batch 620/1077 - Train Accuracy: 0.6324, Validation Accuracy: 0.6488, Loss: 0.4772

Epoch 1 Batch 625/1077 - Train Accuracy: 0.6777, Validation Accuracy: 0.6470, Loss: 0.4922

Epoch 1 Batch 630/1077 - Train Accuracy: 0.6418, Validation Accuracy: 0.6470, Loss: 0.4938

Epoch 1 Batch 635/1077 - Train Accuracy: 0.6225, Validation Accuracy: 0.6545, Loss: 0.5189

Epoch 1 Batch 640/1077 - Train Accuracy: 0.6298, Validation Accuracy: 0.6502, Loss: 0.4754

Epoch 1 Batch 645/1077 - Train Accuracy: 0.6633, Validation Accuracy: 0.6403, Loss: 0.4588

Epoch 1 Batch 650/1077 - Train Accuracy: 0.5848, Validation Accuracy: 0.6442, Loss: 0.5034

Epoch 1 Batch 655/1077 - Train Accuracy: 0.6285, Validation Accuracy: 0.6367, Loss: 0.5056

Epoch 1 Batch 660/1077 - Train Accuracy: 0.6398, Validation Accuracy: 0.6438, Loss: 0.5187

Epoch 1 Batch 665/1077 - Train Accuracy: 0.6062, Validation Accuracy: 0.6410, Loss: 0.5000

Epoch 1 Batch 670/1077 - Train Accuracy: 0.6697, Validation Accuracy: 0.6562, Loss: 0.4637

Epoch 1 Batch 675/1077 - Train Accuracy: 0.6499, Validation Accuracy: 0.6619, Loss: 0.4753

Epoch 1 Batch 680/1077 - Train Accuracy: 0.6298, Validation Accuracy: 0.6495, Loss: 0.4741

Epoch 1 Batch 685/1077 - Train Accuracy: 0.6281, Validation Accuracy: 0.6357, Loss: 0.4853

Epoch 1 Batch 690/1077 - Train Accuracy: 0.6430, Validation Accuracy: 0.6406, Loss: 0.4872

Epoch 1 Batch 695/1077 - Train Accuracy: 0.6566, Validation Accuracy: 0.6570, Loss: 0.4714

Epoch 1 Batch 700/1077 - Train Accuracy: 0.6020, Validation Accuracy: 0.6580, Loss: 0.4759

Epoch 1 Batch 705/1077 - Train Accuracy: 0.6682, Validation Accuracy: 0.6392, Loss: 0.5345

Epoch 1 Batch 710/1077 - Train Accuracy: 0.6070, Validation Accuracy: 0.6445, Loss: 0.4869

Epoch 1 Batch 715/1077 - Train Accuracy: 0.6465, Validation Accuracy: 0.6559, Loss: 0.4783

Epoch 1 Batch 720/1077 - Train Accuracy: 0.6562, Validation Accuracy: 0.6598, Loss: 0.5079

Epoch 1 Batch 725/1077 - Train Accuracy: 0.6481, Validation Accuracy: 0.6506, Loss: 0.4606

Epoch 1 Batch 730/1077 - Train Accuracy: 0.6254, Validation Accuracy: 0.6360, Loss: 0.4844

Epoch 1 Batch 735/1077 - Train Accuracy: 0.6242, Validation Accuracy: 0.6467, Loss: 0.4680

Epoch 1 Batch 740/1077 - Train Accuracy: 0.6520, Validation Accuracy: 0.6534, Loss: 0.4687

Epoch 1 Batch 745/1077 - Train Accuracy: 0.6727, Validation Accuracy: 0.6474, Loss: 0.4603

Epoch 1 Batch 750/1077 - Train Accuracy: 0.6570, Validation Accuracy: 0.6602, Loss: 0.4755

Epoch 1 Batch 755/1077 - Train Accuracy: 0.6430, Validation Accuracy: 0.6612, Loss: 0.4838

Epoch 1 Batch 760/1077 - Train Accuracy: 0.6516, Validation Accuracy: 0.6591, Loss: 0.4726

Epoch 1 Batch 765/1077 - Train Accuracy: 0.6641, Validation Accuracy: 0.6619, Loss: 0.4555

Epoch 1 Batch 770/1077 - Train Accuracy: 0.6380, Validation Accuracy: 0.6527, Loss: 0.4489

Epoch 1 Batch 775/1077 - Train Accuracy: 0.6480, Validation Accuracy: 0.6598, Loss: 0.4614

Epoch 1 Batch 780/1077 - Train Accuracy: 0.6570, Validation Accuracy: 0.6651, Loss: 0.4792

Epoch 1 Batch 785/1077 - Train Accuracy: 0.6775, Validation Accuracy: 0.6602, Loss: 0.4533

Epoch 1 Batch 790/1077 - Train Accuracy: 0.5867, Validation Accuracy: 0.6651, Loss: 0.4935

Epoch 1 Batch 795/1077 - Train Accuracy: 0.6531, Validation Accuracy: 0.6562, Loss: 0.4834

Epoch 1 Batch 800/1077 - Train Accuracy: 0.6035, Validation Accuracy: 0.6566, Loss: 0.4692

Epoch 1 Batch 805/1077 - Train Accuracy: 0.6805, Validation Accuracy: 0.6626, Loss: 0.4646

Epoch 1 Batch 810/1077 - Train Accuracy: 0.6559, Validation Accuracy: 0.6488, Loss: 0.4372

Epoch 1 Batch 815/1077 - Train Accuracy: 0.6281, Validation Accuracy: 0.6523, Loss: 0.4664

Epoch 1 Batch 820/1077 - Train Accuracy: 0.6387, Validation Accuracy: 0.6520, Loss: 0.4948

Epoch 1 Batch 825/1077 - Train Accuracy: 0.6312, Validation Accuracy: 0.6467, Loss: 0.4574

Epoch 1 Batch 830/1077 - Train Accuracy: 0.6441, Validation Accuracy: 0.6570, Loss: 0.4667

Epoch 1 Batch 835/1077 - Train Accuracy: 0.6711, Validation Accuracy: 0.6673, Loss: 0.4413

Epoch 1 Batch 840/1077 - Train Accuracy: 0.6527, Validation Accuracy: 0.6680, Loss: 0.4422

Epoch 1 Batch 845/1077 - Train Accuracy: 0.6500, Validation Accuracy: 0.6641, Loss: 0.4607

Epoch 1 Batch 850/1077 - Train Accuracy: 0.6202, Validation Accuracy: 0.6612, Loss: 0.4880

Epoch 1 Batch 855/1077 - Train Accuracy: 0.6348, Validation Accuracy: 0.6570, Loss: 0.4619

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

<ipython-input-50-2fda6f94fad5> in <module>()

46 target_sequence_length: targets_lengths,

47 source_sequence_length: sources_lengths,

---> 48 keep_prob: keep_probability})

49

50

d:\Anaconda3\envs\tf1.1\lib\site-packages\tensorflow\python\client\session.py in run(self, fetches, feed_dict, options, run_metadata)

776 try:

777 result = self._run(None, fetches, feed_dict, options_ptr,

--> 778 run_metadata_ptr)

779 if run_metadata:

780 proto_data = tf_session.TF_GetBuffer(run_metadata_ptr)

d:\Anaconda3\envs\tf1.1\lib\site-packages\tensorflow\python\client\session.py in _run(self, handle, fetches, feed_dict, options, run_metadata)

980 if final_fetches or final_targets:

981 results = self._do_run(handle, final_targets, final_fetches,

--> 982 feed_dict_string, options, run_metadata)

983 else:

984 results = []

d:\Anaconda3\envs\tf1.1\lib\site-packages\tensorflow\python\client\session.py in _do_run(self, handle, target_list, fetch_list, feed_dict, options, run_metadata)

1030 if handle is None:

1031 return self._do_call(_run_fn, self._session, feed_dict, fetch_list,

-> 1032 target_list, options, run_metadata)

1033 else:

1034 return self._do_call(_prun_fn, self._session, handle, feed_dict,

d:\Anaconda3\envs\tf1.1\lib\site-packages\tensorflow\python\client\session.py in _do_call(self, fn, *args)

1037 def _do_call(self, fn, *args):

1038 try:

-> 1039 return fn(*args)

1040 except errors.OpError as e:

1041 message = compat.as_text(e.message)

d:\Anaconda3\envs\tf1.1\lib\site-packages\tensorflow\python\client\session.py in _run_fn(session, feed_dict, fetch_list, target_list, options, run_metadata)

1019 return tf_session.TF_Run(session, options,

1020 feed_dict, fetch_list, target_list,

-> 1021 status, run_metadata)

1022

1023 def _prun_fn(session, handle, feed_dict, fetch_list):

KeyboardInterrupt:

保存参数

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

# Save parameters for checkpoint

helper.save_params(save_path)

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

import tensorflow as tf

import numpy as np

import helper

import problem_unittests as tests

_, (source_vocab_to_int, target_vocab_to_int), (source_int_to_vocab, target_int_to_vocab) = helper.load_preprocess()

load_path = helper.load_params()

句子转换为序列

def sentence_to_seq(sentence, vocab_to_int):

"""

Convert a sentence to a sequence of ids

:param sentence: String

:param vocab_to_int: Dictionary to go from the words to an id

:return: List of word ids

"""

# TODO: Implement Function

ids = []

for word in sentence.split():

idx = vocab_to_int.get(word, vocab_to_int['<UNK>'])

ids.append(idx)

return ids

"""

DON'T MODIFY ANYTHING IN THIS CELL THAT IS BELOW THIS LINE

"""

tests.test_sentence_to_seq(sentence_to_seq)

利用训练好的网络翻译一个英语句子。

translate_sentence = 'he saw a old yellow truck .'

"""

DON'T MODIFY ANYTHING IN THIS CELL

"""

translate_sentence = sentence_to_seq(translate_sentence, source_vocab_to_int)

loaded_graph = tf.Graph()

with tf.Session(graph=loaded_graph) as sess:

# Load saved model

loader = tf.train.import_meta_graph(load_path + '.meta')

loader.restore(sess, load_path)

input_data = loaded_graph.get_tensor_by_name('input:0')

logits = loaded_graph.get_tensor_by_name('predictions:0')

target_sequence_length = loaded_graph.get_tensor_by_name('target_sequence_length:0')

source_sequence_length = loaded_graph.get_tensor_by_name('source_sequence_length:0')

keep_prob = loaded_graph.get_tensor_by_name('keep_prob:0')

translate_logits = sess.run(logits, {input_data: [translate_sentence]*batch_size,

target_sequence_length: [len(translate_sentence)*2]*batch_size,

source_sequence_length: [len(translate_sentence)]*batch_size,

keep_prob: 1.0})[0]

print('Input')

print(' Word Ids: {}'.format([i for i in translate_sentence]))

print(' English Words: {}'.format([source_int_to_vocab[i] for i in translate_sentence]))

print('\nPrediction')

print(' Word Ids: {}'.format([i for i in translate_logits]))

print(' French Words: {}'.format(" ".join([target_int_to_vocab[i] for i in translate_logits])))

要想得到更好的结果,需要更多的训练数据集,你可以使用这个 WMT10 French-English corpus,但是这也将会花费更长的时间。