基于深度学习的关系抽取文章梳理

15 Jul 2018 | relation-extraction |这篇文章主要梳理了基于深度学习的关系抽取的研究进展,列出了主要的文章,后续会再慢慢解读。

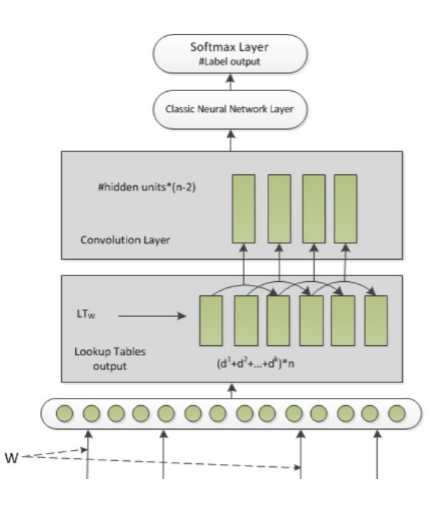

1 CNN:这篇文章是第一次使用CNN来做关系分类,使用的CNN结构也十分简单【[1] Liu C Y, Sun W B, Chao W H, et al. Convolution Neural Network for Relation Extraction[C]// International Conference on Advanced Data Mining and Applications. Springer, Berlin, Heidelberg, 2013:231-242. 】。

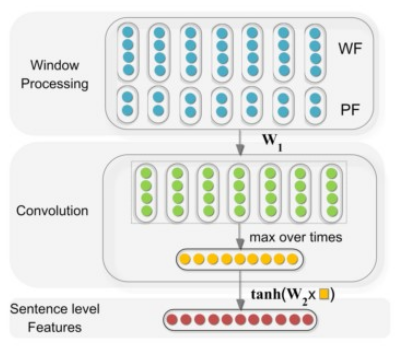

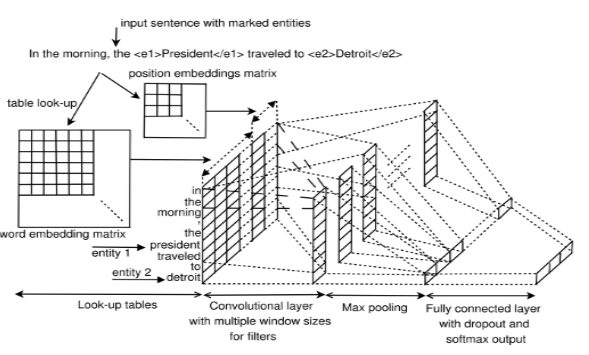

2 CNN+POOling:这篇文章使用了比较经典的CNN的结构,包含了Pooling层,以及设计了Position Features。【Zeng, D & Liu, K & Lai, S & Zhou, G & Zhao, J. (2014). Relation classification via convolutional deep neural network. the 25th International Conference on Computational Linguistics: Technical Papers. 2335-2344. 】

3 Multi-sized CNN :在卷积阶段使用多个尺寸的卷积核【Nguyen T H, Grishman R. Relation Extraction: Perspective from Convolutional Neural Networks[C]// The Workshop on Vector Space Modeling for Natural Language Processing. 2015:39-48. 】

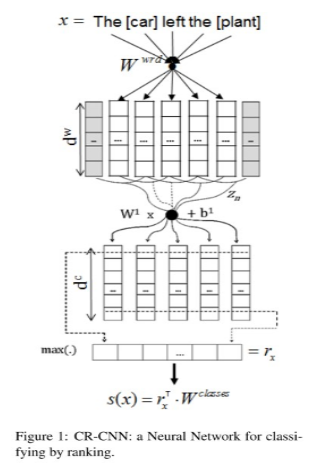

4 CR-CNN:使用新的损失函数,不再使用softmax+cross-entropy的方式【Dos Santos, Cicero & Xiang, Bing & Zhou, Bowen. (2015). Classifying Relations by Ranking with Convolutional Neural Networks. Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing. 1. 10.3115/v1/P15-1061. 】

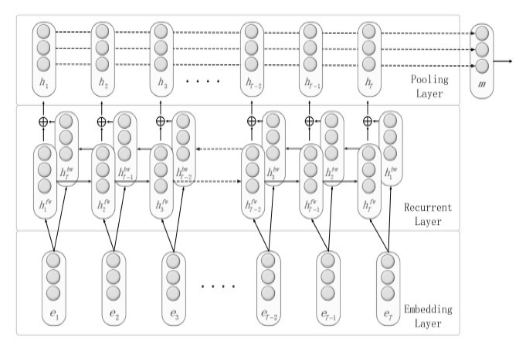

5 RNN:这篇文章不再使用CNN作为基本结构,而是开始尝试RNN。【Zhang D, Wang D. Relation Classification via Recurrent Neural Network[J]. Computer Science, 2015. 】

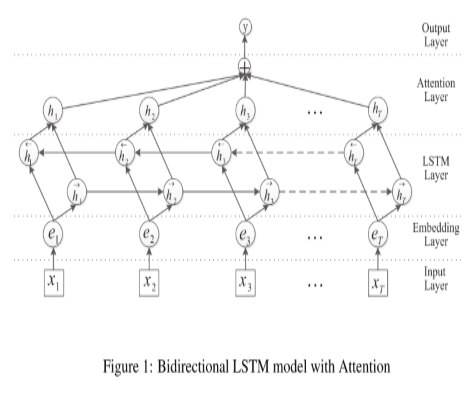

6 BiLSTM Attention:这篇文章是基于RNN对句子建模,在上一篇RNN的基础上 做了一点改进,使用标准的的Attention + BiLSTM【Zhou P, Shi W, Tian J, et al. Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification[C]// Meeting of the Association for Computational Linguistics. 2016:207-212. 】

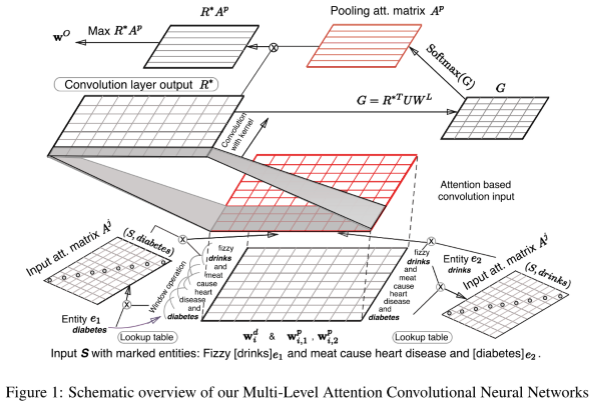

7 Multi-Level Attention CNN:文中设计了相对复杂的两层Attention机制。【Wang L, Cao Z, Melo G D, et al. Relation Classification via Multi-Level Attention CNNs[C]// Meeting of the Association for Computational Linguistics. 2016:1298-1307. 】

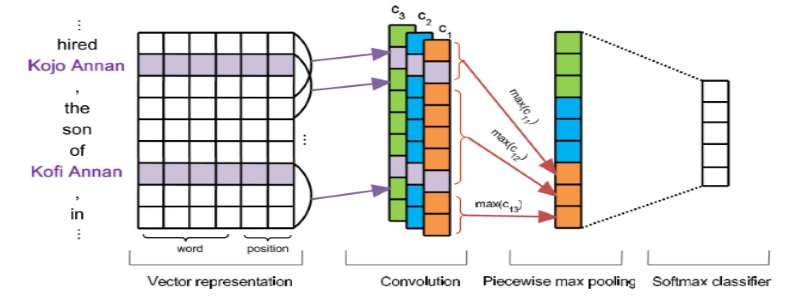

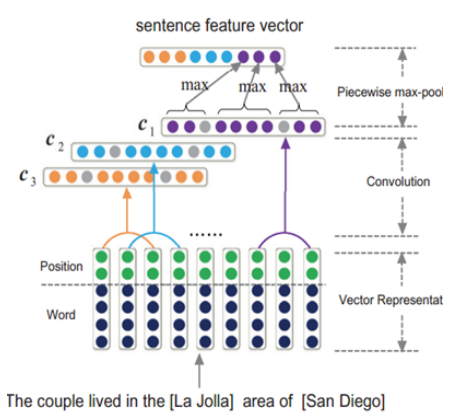

8 PCNN:使用远程监督可以自动标注训练样本,不再需要手动标注数据。 【Zeng D, Liu K, Chen Y, et al. Distant Supervision for Relation Extraction via Piecewise Convolutional Neural Networks[C]// Conference on Empirical Methods in Natural Language Processing. 2015:1753-1762. 】

9 APCNN+D:这篇文章的贡献主要在从KG中引入额外的实体描述信息,加强embedding的学习【Liu, K.; He, S.; and Zhao, J. 2017. Distant supervision for relation extraction with sentence-level attention and entity descriptions. In AAAI, 3060–3066. 】

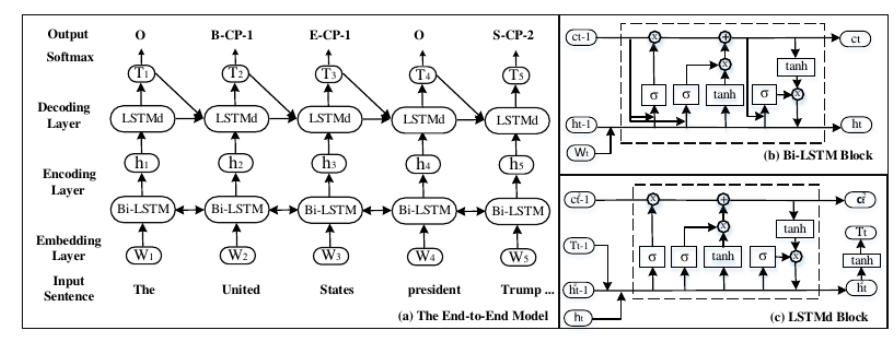

10 ACL2017 outstanding paper: 文章基于新的标注方法,研究了基于LSTM的end-to-end模型来解决联合抽取实体和关系的任务【Zheng S, Wang F, Bao H, et al. Joint Extraction of Entities and Relations Based on a Novel Tagging Scheme[J]. 2017:1227-1236. 】

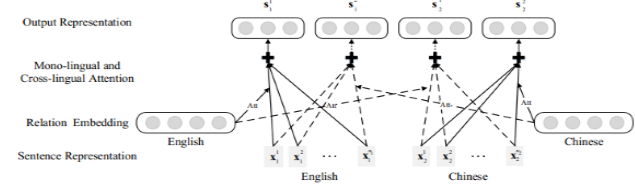

11 MNRE : 该文章提出了多语言Attention关系提取方法,以考虑多种语言之间的模式一致性和互补性。 结果表明,其模型可以有效地建立语言之间的关系模式,实现很好地效果。【Lin Y, Liu Z, Sun M. Neural Relation Extraction with Multi-lingual Attention[C]// Meeting of the Association for Computational Linguistics. 2017:34-43. 】

参考文章: 基于深度学习的关系抽取