RNN的前向传播

16 Mar 2018 | deep-learning |循环神经网络(RNN)与普通的神经网络相比,循环神经网络能够记住上一次的信息,就是在隐藏层输入的时候,不仅仅接受来自与x的输入,还接受来自于上一次隐藏层的值。

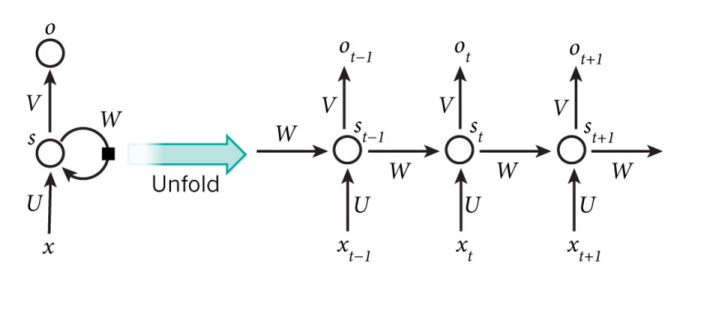

常见的RNN示意图:

其中的U是输入与隐藏层之间的权重矩阵,W是隐藏层以隐藏层之间的权重矩阵,V是隐藏层与输出层之间的权重矩阵。

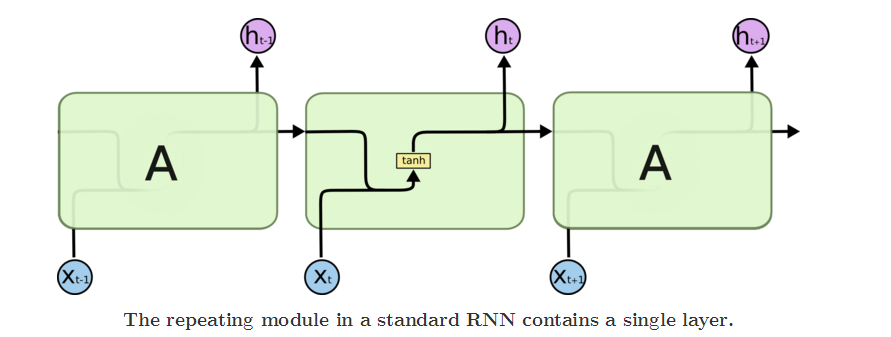

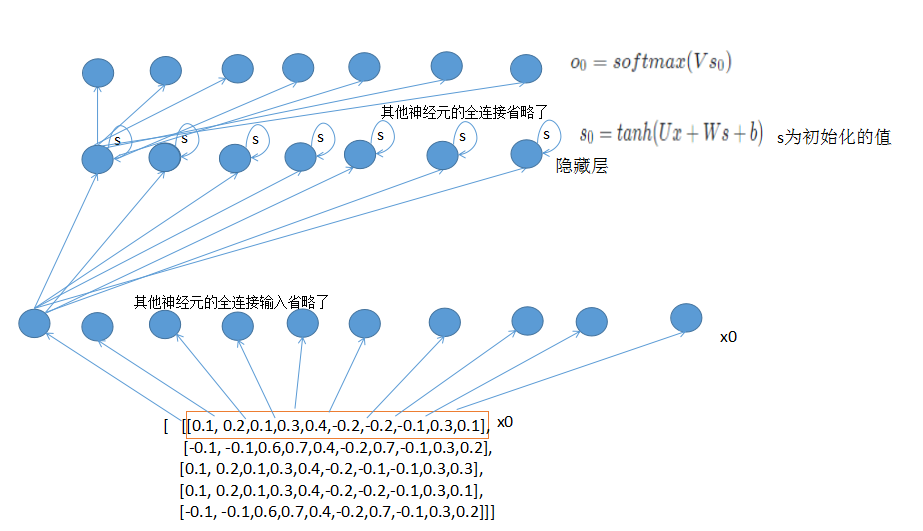

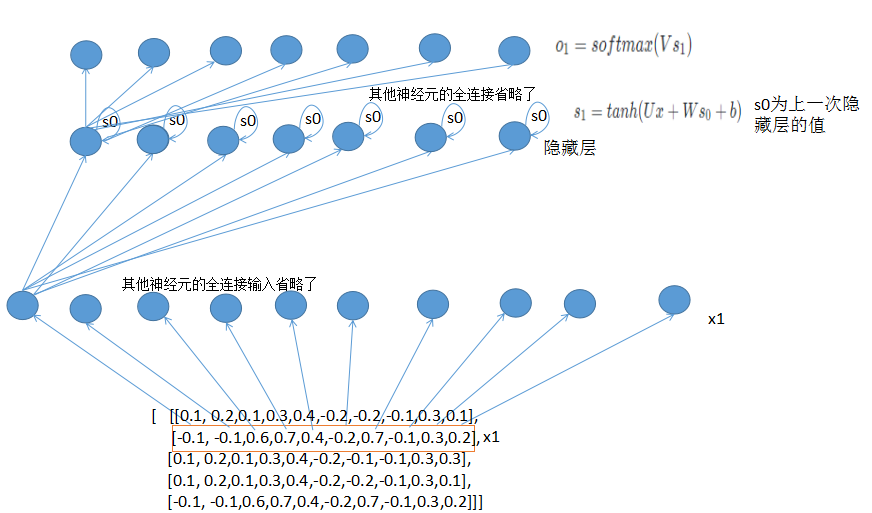

一个标准的RNN模型如图所示:

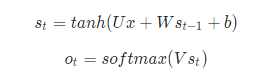

主要计算入下:

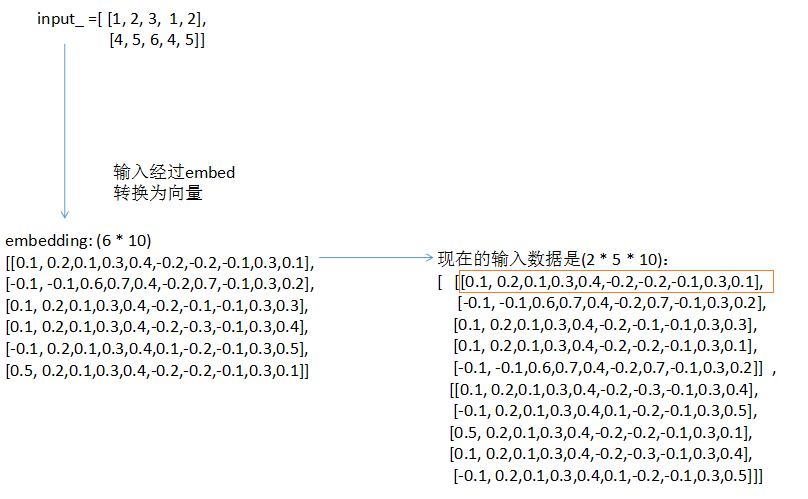

为了更好的理解执行过程,我画了一个RNN前向传播的流程执行图。首先输入经过嵌入层embed,然后再经过隐藏层,隐藏层输入当前的状态值s_t,然后再经过softmax输出。

RNN前向传播的代码:

def forward_propagation(self, x):

# The total number of time steps

T = len(x) #输入的总长度

# During forward propagation we save all hidden states in s because need them later.

# We add one additional element for the initial hidden, which we set to 0

s = np.zeros((T + 1, self.hidden_dim))

s[-1] = np.zeros(self.hidden_dim)#初始化隐藏层的值,一般为0

# The outputs at each time step. Again, we save them for later.

o = np.zeros((T, self.word_dim))

# For each time step...

for t in np.arange(T):

# Note that we are indxing U by x[t]. This is the same as multiplying U with a one-hot vector.

s[t] = np.tanh(self.U[:,x[t]] + self.W.dot(s[t-1]))#每次隐藏层的输入包括当前x的输入和上一次隐藏层的值

o[t] = softmax(self.V.dot(s[t]))#隐藏层经过全连接和softmax层后输出

return [o, s]

更好的博文请参考:

Recurrent Neural Networks Tutorial, Part 2 – Implementing a RNN with Python, Numpy and Theano

The Unreasonable Effectiveness of Recurrent Neural Networks

上面的文章写的真的很棒哦~